I just published the first version of my open source C# library named Dandraka.FuzzySubstringSearch in Github and Nuget.org.

FuzzySubstringSearch is intended to cover the following need: you need to know if a string (let’s call it Target) contains another string (let’s call it Searched). Obviously you can do this using String.Contains(). But if you need to account for spelling errors, this doesn’t work.

In this case, you need what is usually called “fuzzy” search. This concept goes like this: matching is not a yes or no question but a range.

– If the Target contains the Searched, correctly, we’re at one end of the range (say, 100%).

– If Target contains no part of Searched we’re at the other end (0%).

– And then we have cases somewhere in the middle. Like if you search inside “Peter stole my precius headphones” for the word “precious”. That should be more than 0 but less than 100, right?

Under this concept, we need a way to calculate this “matching percentage”. Obviously this is not new problem. It’s a problem Computer Science has faced since decades. And there are different algorithms for this, like the Levenshtein distance, Damerau–Levenshtein distance, the Jaccard index and others.

But the problem is, these algorithms compare similar strings. They don’t expect that the Target is much larger than Searched.

Enter N-grams. N-grams are, simply put, pieces of the strings (both Target and Searched). N refers to the size of the pieces: 2-grams means the pieces are always 2 characters, 3-grams means 3 characters etc. You break Target and Searched into pieces (the N-grams), check how many are matching and divide by how many pieces Searched has.

Let’s do an example: we’re searching inside “Peter stole my precius headphones” for “precious”.

Here’s how it goes. Let’s use 3-grams. Target has the following 3-grams:

| Pet | Peter stole my precius headphones |

| ete | Peter stole my precius headphones |

| ter | Peter stole my precius headphones |

| er(space) | Peter stole my precius headphones |

| r(space)s | Peter stole my precius headphones |

| (space)st | Peter stole my precius headphones |

| (etc etc) | (etc etc) |

| pre | Peter stole my precius headphones |

| rec | Peter stole my precius headphones |

| eci | Peter stole my precius headphones |

| ciu | Peter stole my precius headphones |

| ius | Peter stole my precius headphones |

| (etc etc) | (etc etc) |

And Searched has the following 6:

| pre | precious |

| rec | precious |

| eci | precious |

| cio | precious |

| iou | precious |

| ous | precious |

How many of the Searched 3-grams can you find in Target? The following 3: pre, rec, eci. So the percentage is 3 found / 6 total = 50%. And if you use 2-grams instead of 3-grams, the percentage increases to 71% since more 2-grams are matching. But, importantly, you “pay” this with more CPU time.

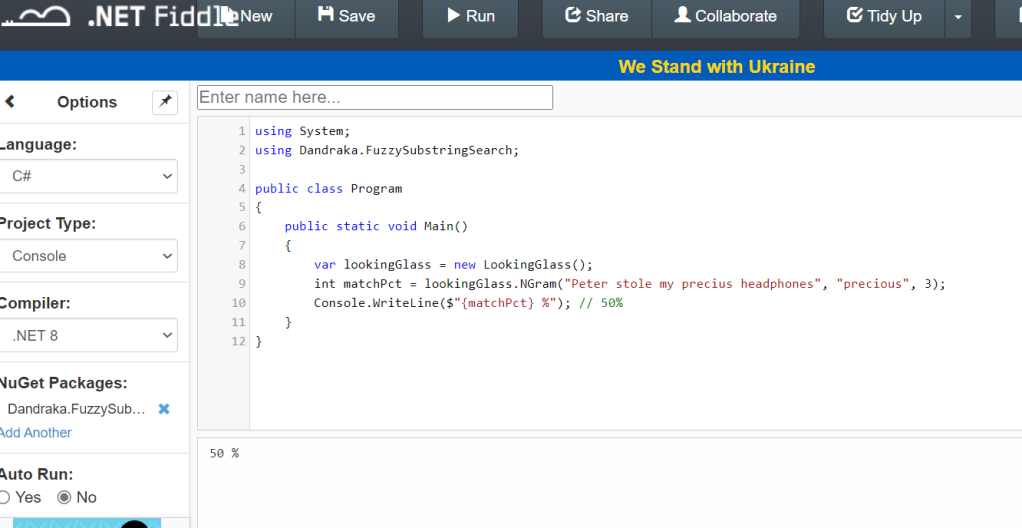

That’s exactly what the library calculates.

You can find a C# usage example in the Readme file and detailed developer’s documentation in the docs folder.

Enjoy 😊